Late night. After a long period of silence, Black Forest Labs has released their latest model, Flux.2.

Anyone who works with AI art generation or workflows will certainly know the name. When Flux first debuted, it was the brightest star in the art generation world, single-handedly raising the quality bar for open-source image models. Its Kontext contextual image editing capability also paved the way for image editing as we know it today. However, the long-awaited Flux.2 doesn’t seem to shine as brightly. Especially after the recent explosive release of Google’s NanobananaPro, it seems no one is paying attention to this quietly open-sourced Flux hiding in the corner. What has become of the former king of open-source models?

First, let’s look at what updates Flux.2 brings.

01 Flux.2’s Update Content

① Multi-reference support

Flux.2 can reference up to 10 images at once, providing the best character/product/style consistency available today.

Case 1 Prompt: Combine elements from multiple images while maintaining identity consistency in complex scenes. Create advertising variants with consistent faces, product models presented in any environment, or fashion spreads with consistent models.

Case 2 Prompt: Fuse multiple elements into one scene

To be honest, this reference capability is slightly better than NanobananaPro’s. But not by much…

② Image detail and photorealism

Flux.2 has higher detail, clearer textures, and more stable lighting than version 1, making it suitable for product photography, visualization, and photography-like use cases.

But honestly, texture quality has become commonplace. Google’s models have already reached the point where you can’t tell reality from AI…

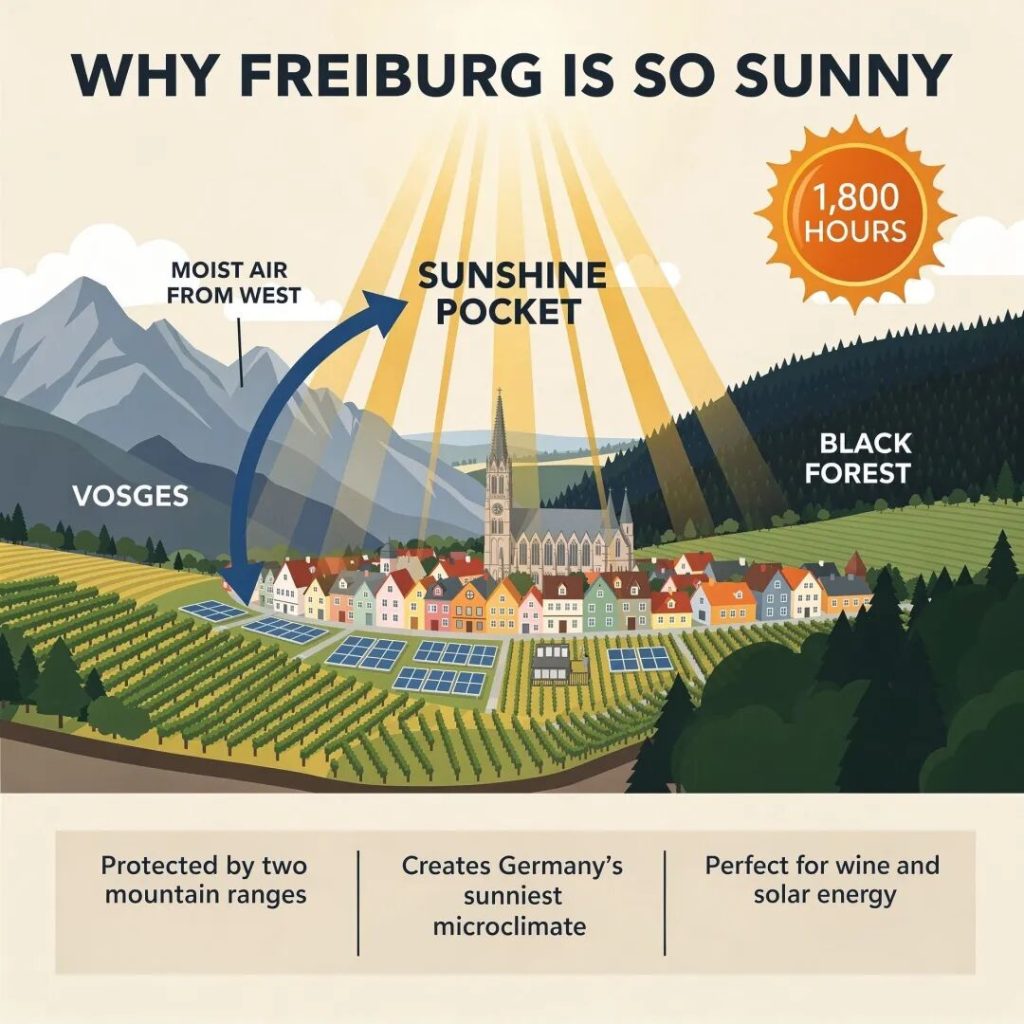

③ Text rendering

Complex typography, infographics, memes, and readable small text in UI prototypes now work reliably in production environments.

⑤ World knowledge

This is actually the most noteworthy point: more realistic world knowledge, lighting, and spatial logic, resulting in more coherent scenes that behave as expected. In other words, the model has a better grasp of the environment and spatial context of what it is generating.

These are Flux.2’s upgrades: higher image quality, better prompt following, text layout capabilities, and world knowledge.

Then the models:

- FLUX.2 [pro]: This is Flux.2’s most powerful model, not open source.

- FLUX.2 [flex]: Top-tier image quality with faster speed, not open source.

- FLUX.2 [dev]: A 32B open-weights model derived from the FLUX.2 base model. This is the open-source one: it can be downloaded from Hugging Face and deployed on local consumer-grade GPUs. The community has fp8 versions.

- FLUX.2 [klein] (coming soon): Open source Apache 2.0 model, distilled from the FLUX.2 base model for smaller size.

- FLUX.2 – VAE: A new variational autoencoder for latent representation, providing an optimized trade-off between learnability, quality, and compression rate.
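To put the 32B figure and the community fp8 versions in perspective, here is a rough back-of-the-envelope sketch of how much memory the weights alone would take. It assumes 2 bytes per parameter for bf16 and 1 byte per parameter for fp8, and it ignores the text encoder, the VAE, and activations, so real-world usage will be higher. The function name `weight_memory_gb` is made up for this illustration and is not part of any Flux tooling.

```python
# Rough estimate of weight storage only (assumption: no text encoder,
# VAE, or activation memory is counted here).
BYTES_PER_PARAM = {"bf16": 2.0, "fp8": 1.0}


def weight_memory_gb(num_params: float, dtype: str) -> float:
    """Approximate weight storage in GB, using 1 GB = 1e9 bytes."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


if __name__ == "__main__":
    params = 32e9  # the 32B figure quoted for FLUX.2 [dev]
    for dtype in ("bf16", "fp8"):
        print(f"{dtype}: ~{weight_memory_gb(params, dtype):.0f} GB of weights")
```

By this estimate, halving the bytes per parameter halves the weight footprint, which is likely why fp8 builds matter so much for running the model on a local card.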

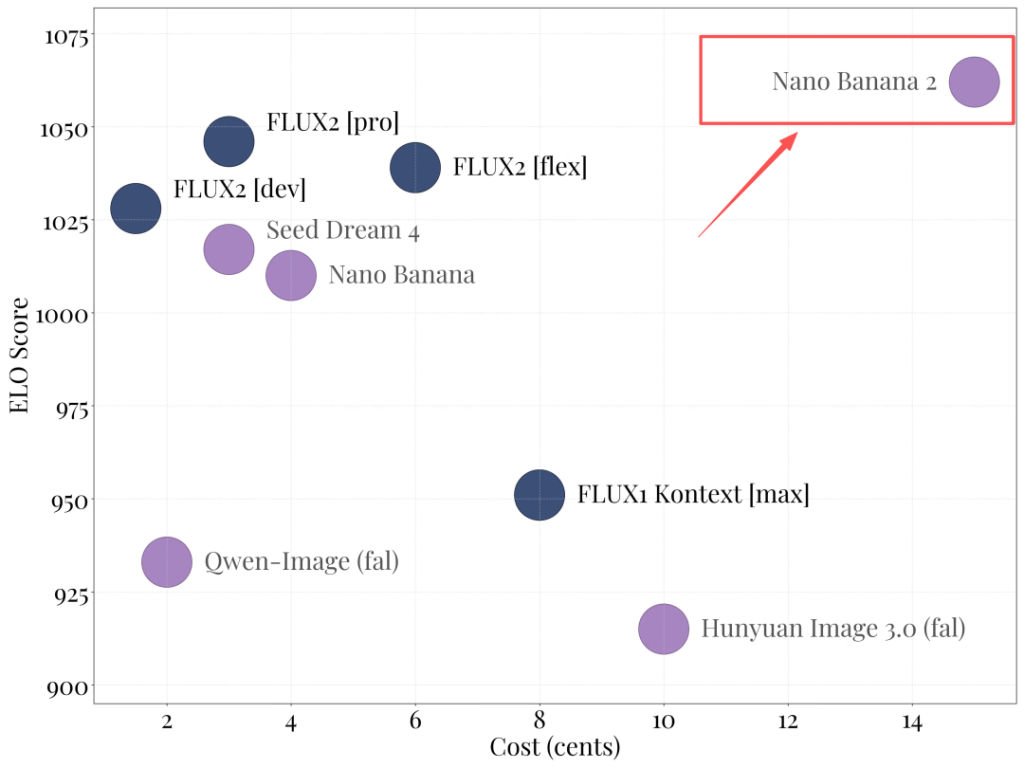

Then there is the API pricing.

That covers what’s new in Flux.2. The official documentation frankly places NanobananaPro at the top (highest in both cost and score). Among open-source models, Flux.2 is indeed number one, and it also surpasses Seed Dream4 (Doubao).

After seeing all this, let’s compare it with Google’s NanobananaPro.

02 Comparison with NanobananaPro

First, online comparison tests (no evaluation from me):

① A full-body shot of a woman wearing a black top, denim shorts, and brown leather boots standing in a museum, with realistic lighting and details

② A close-up of a real female model’s face, with realistic lighting and delicate skin pore texture

Then image editing capabilities: Merge the subjects from the following images into one picture.

① Prompt: The person from image 1 pets the cat from image 2, with the bird from image 3 standing beside the cat

Flux.2 Pro vs NanobananaPro

② Prompt: Change the glove color in image 1 to match image 2’s color

Flux.2 Pro vs NanobananaPro

③ Prompt: Match the pose reference from image 2 for the person in image 1

Flux.2 Pro vs NanobananaPro

The gap is clearly visible here. In Flux.2 Pro’s generated image, the chair has been removed, and the legs clearly don’t match the pose reference: it should be left foot forward, right foot back, but Flux generated both legs squeezed together. Google’s NanobananaPro, however, perfectly preserved the original chair and also controlled the leg posture very well.

So, ultimately, a new king has ascended to the throne…

03 Final thoughts

I still vividly remember when the Black Forest team left Stability AI, the company behind Stable Diffusion, and resolutely launched Flux. It split open a brilliant starry sky in the long night of AI art generation. That was an era when open-source models dominated closed-source ones.

But so much time has passed since then. After round after round of model updates and iterations, closed-source models have returned to the throne. Open source just can’t compete… Closed-source models mean commercial revenue, and cold, hard cash means computing power, plus the training data from so many users. How can such a small laboratory compete with a giant like Google?

But I can’t help but feel respect for Black Forest. They’ve been steadfastly holding onto their open-source dream, quietly contributing to the open-source community from the corner. This is the romance that belongs to open-source models.